A discussion with Prudence Malinki - AI in policy making, trademark abuse and public vs. proprietary uses of the technology.

by Kelly Hardy

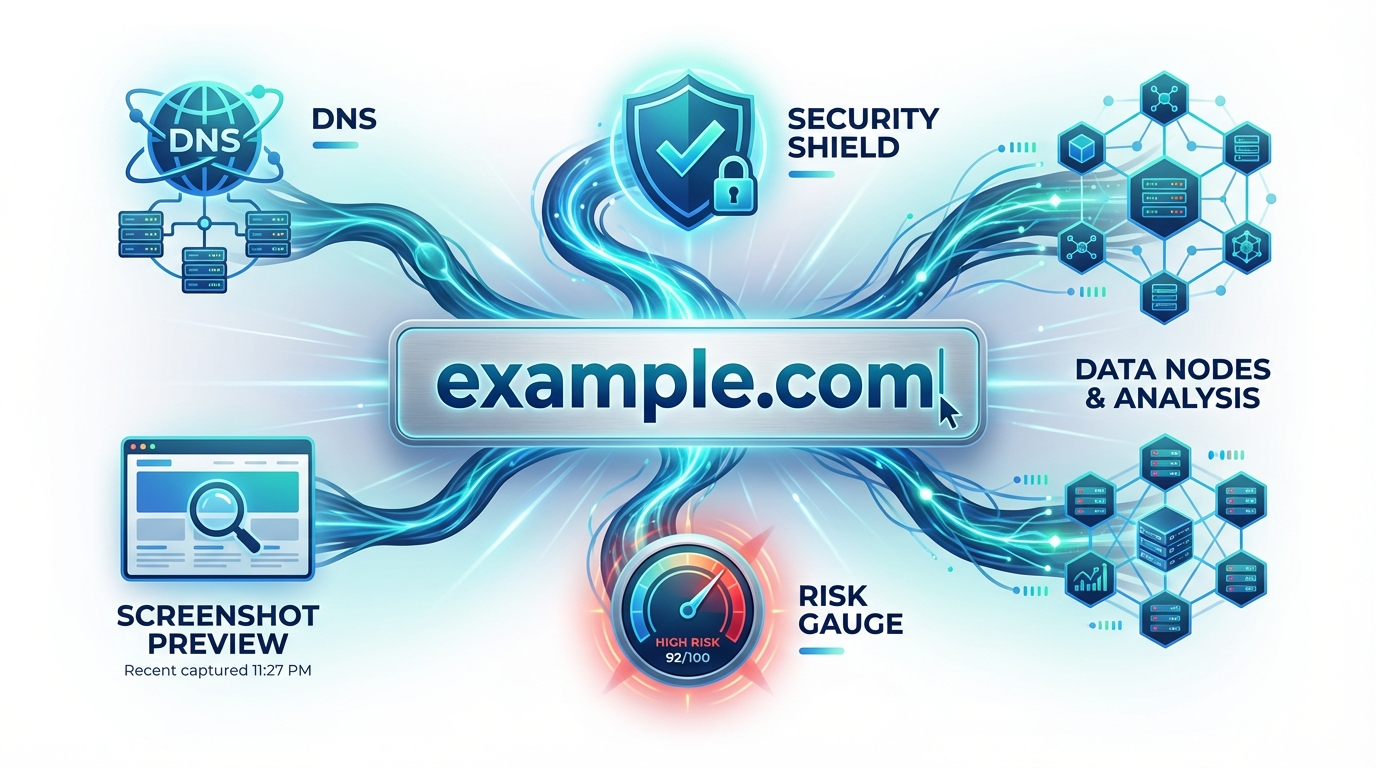

As AI proliferates, forward-thinking companies in the domain space are finding creative ways to harness the technology to enhance their businesses. From business intelligence tools to the detection of online harms, we are in an exciting period of discovery. As we make this leap forward in innovation, we must also leap forward in intention and build trust by deploying AI in products developed with a strong ethical compass, while maintaining a focus on good internet stewardship.

As some companies in the Internet Infrastructure industry begin to incorporate AI into their daily business, we are also seeing the first international legislation that seeks to apply necessary guardrails to the technology as it develops. Alongside these regulations, individual companies applying AI to new and existing products must also develop policy language that governs their use of the technology and protects the privacy of customers and users.

Prudence Malinki, Head of Industry Relations at MarkMonitor, thinks deeply about this shift in technology. Known for her razor-sharp wit, Prudence shares her thoughts about AI in relation to policy making, trademark abuse and public vs. proprietary uses of the technology.

iQ: AI is changing the landscape for those of us who work "behind the scenes" of the internet. How does MarkMonitor view this development?

Prudence: We are observing the developments in AI with cautious optimism, as we can see great scope to improve, refine and optimise many elements of our work and processes through the application of AI. Having already watched novel ways of integrating AI, from name generation to trademark searches, we see this as innovative and exciting.

iQ: What do you think people are underestimating about the use of AI in domain abuse, trademark abuse and infringement?

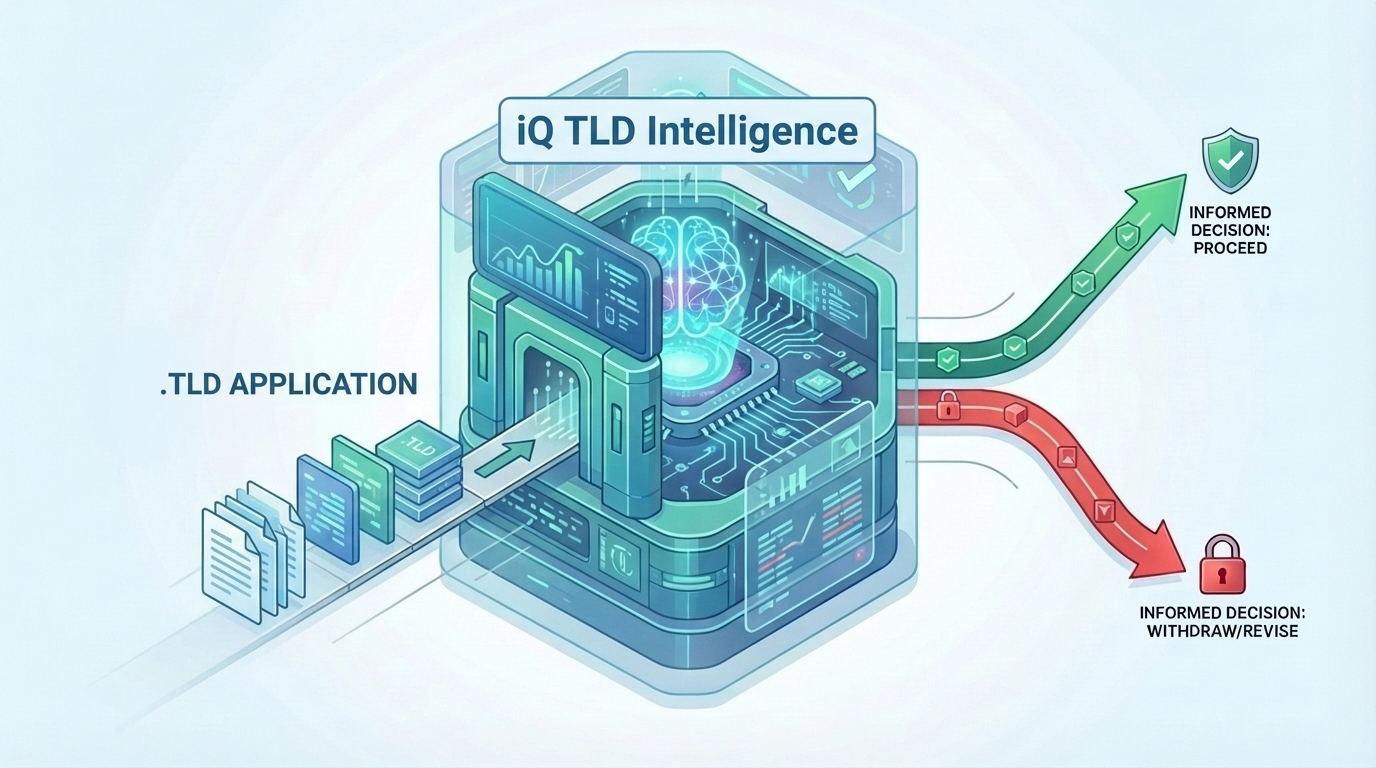

Prudence: There is a lot of scope for AI on both sides of the coin: from drafting high-level complaints for dispute resolution services such as the UDRP, to creating domain names that infringe Intellectual Property. Also, as this is such an emerging space, further clarity is still required on copyright and infringement. There's bound to be much more definitive case law on generative AI output as the field matures. As for its use in the fight against abuse, we are already seeing AI deployed as an incredibly useful tool against online infringement.

iQ: What is your advice for others in our space who are looking to introduce policy and terms of use changes around AI?

Prudence: The right course of action depends on whether the AI systems are internal tools or public, external tools. If you have an internal tool, it's advisable to immediately create a general policy to ensure that employees aren't unwittingly sharing sensitive, proprietary information on publicly accessible AI systems, where it can remain in the public domain. There is, however, a little more flexibility in creating use policies for internal tools that you have built and own, as those will be governed more by the business objectives and purposes behind the tools. And if there haven't been any discussions about AI at all, they should definitely take place, with high-level policies on employee use of third-party tools at the top of the agenda.

As with any emerging technology, AI will impact the domain and internet infrastructure industries in both positive and negative ways. It is important that we think broadly and critically about how we use and legislate this technology in our own space.